Data Index standalone service

Data Index service deployment

The Data Index service can be deployed by directly referencing a distributed Data Index image. Different images are provided, one for each supported persistence layer. Each distribution exposes properties to configure aspects such as the database connection or the communication with other services. The goal is to configure the container so that it can process ProcessInstances and Jobs events together with their related data, index and store that data in the database, and finally expose the Data Index GraphQL endpoint to consume it.

Data Index distributions

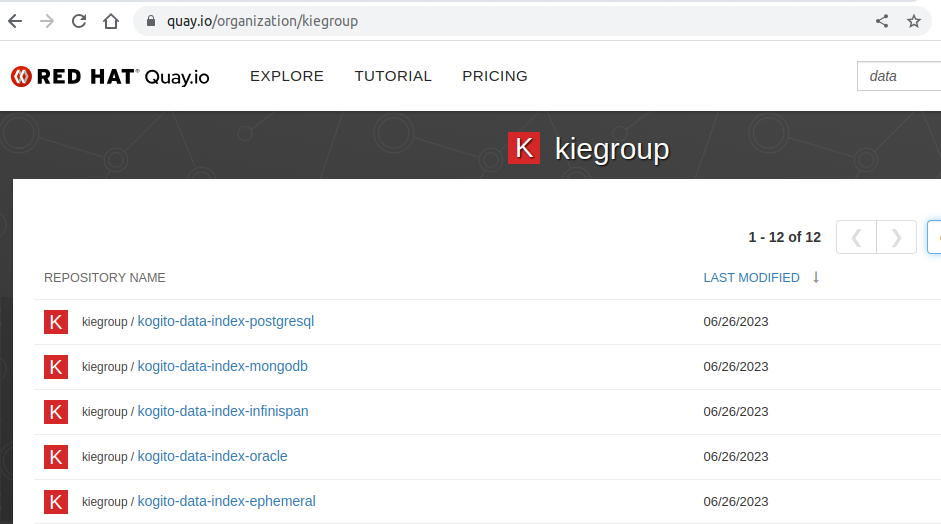

The different Data Index image distributions can be found in Quay.io/kiegroup.

Data Index standalone service deployment

There are several ways to deploy the Data Index service, but all deployments share some common points:

- Reference the Data Index image that matches the type of database that will store the indexed data.
- Provide the database connection properties so that Data Index can store the indexed data. The Data Index service does not initialize its database schema automatically. To initialize the database schema, enable Flyway migration by setting QUARKUS_FLYWAY_MIGRATE_AT_START=true.
- Define KOGITO_DATA_INDEX_QUARKUS_PROFILE to set how the events are received (default: kafka-events-support).

You must prepare the OpenShift Serverless Logic workflow to support full communication with an external Data Index service. For this purpose, make sure that the following addons are included: OpenShift Serverless Logic workflow addon dependencies to support the connection with external Data Index

Data Index deployment resource example using Kafka eventing:

The following example shows how the Data Index resource definition can be deployed as part of a docker-compose definition.

DataIndex resource in a docker-compose deployment using Kafka eventing:

    data-index:
      container_name: data-index
      image: quay.io/kiegroup/kogito-data-index-postgresql-nightly:main-2024-02-09 # (1)
      ports:
        - "8180:8080"
      depends_on:
        postgres:
          condition: service_healthy
      volumes:
        - ./../target/classes/META-INF/resources/persistence/protobuf:/home/kogito/data/protobufs/
      environment:
        QUARKUS_DATASOURCE_JDBC_URL: "jdbc:postgresql://postgres:5432/kogito" # (2)
        QUARKUS_DATASOURCE_USERNAME: kogito-user
        QUARKUS_DATASOURCE_PASSWORD: kogito-pass
        QUARKUS_HTTP_CORS_ORIGINS: "/.*/"
        KOGITO_DATA_INDEX_QUARKUS_PROFILE: kafka-events-support # (3)
        QUARKUS_FLYWAY_MIGRATE_AT_START: "true" # (4)
        QUARKUS_HIBERNATE_ORM_DATABASE_GENERATION: update

| 1 | Reference the right Data Index image to match with the type of Database, in this case quay.io/kiegroup/kogito-data-index-postgresql-nightly:main-2024-02-09 |
| 2 | Provide the database connection properties. |
| 3 | When KOGITO_DATA_INDEX_QUARKUS_PROFILE is not present, the Data Index is configured to use Kafka eventing. |
| 4 | To initialize the database schema at start using flyway. |
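The depends_on condition service_healthy used above requires the postgres service to define a healthcheck. A minimal sketch of such a companion service follows; it is not part of the original example. The image tag, the healthcheck timings, and the use of pg_isready are assumptions, while the user, password, and database name simply mirror the connection properties passed to data-index.

```yaml
# Hypothetical companion service for the data-index definition above.
postgres:
  container_name: postgres
  image: postgres:16                 # assumption: any supported PostgreSQL image
  environment:
    POSTGRES_USER: kogito-user       # mirrors QUARKUS_DATASOURCE_USERNAME
    POSTGRES_PASSWORD: kogito-pass   # mirrors QUARKUS_DATASOURCE_PASSWORD
    POSTGRES_DB: kogito              # mirrors the database name in the JDBC URL
  healthcheck:
    # pg_isready is shipped with the official postgres image
    test: ["CMD-SHELL", "pg_isready -U kogito-user -d kogito"]
    interval: 10s                    # assumption: illustrative timings
    timeout: 5s
    retries: 5
```

With a healthcheck in place, docker-compose starts data-index only after PostgreSQL reports that it accepts connections, so the Flyway migration at startup does not race the database.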

When Kafka eventing is used, workflow applications need to be configured to send their events to the Kafka topic kogito-processinstances-events, so that the Data Index service can consume them.

In this case, Data Index is ready to consume the events sent to the topics kogito-processinstances-events and kogito-jobs-events.

It is important to configure the workflow application to send the events to the topics that Data Index consumes. To explore the specific configuration to add to the workflow to connect with Data Index using Kafka eventing, see Data Index Kafka eventing.

Example of configuration in OpenShift Serverless Logic application passed in application.properties to configure connection with Data Index using Kafka connector:

Usually, when docker-compose is used, the workflow application generates the container image that is added to docker-compose. If the Kafka eventing configuration values were not present before the container image was created, they need to be passed as environment variables. More details about customizing Quarkus generated images can be found in Quarkus Container Images Customizing guide.
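As a sketch of passing these values as environment variables, the fragment below adds them to a workflow application service in the same docker-compose file. The service name my-workflow, its image, and the kafka:9092 bootstrap address are hypothetical. The variable names assume the usual MicroProfile Config mapping (uppercase, with every non-alphanumeric character replaced by an underscore) applied to SmallRye Kafka connector properties such as mp.messaging.outgoing.kogito-processinstances-events.connector. Check the exact property set against the Data Index Kafka eventing guide for your version.

```yaml
# Hypothetical workflow application service; name and image are placeholders.
my-workflow:
  image: example/my-workflow:latest   # assumption: image built from the workflow project
  environment:
    # kafka.bootstrap.servers (assumption: a Kafka service named "kafka" in this file)
    KAFKA_BOOTSTRAP_SERVERS: kafka:9092
    # mp.messaging.outgoing.kogito-processinstances-events.connector
    MP_MESSAGING_OUTGOING_KOGITO_PROCESSINSTANCES_EVENTS_CONNECTOR: smallrye-kafka
    # mp.messaging.outgoing.kogito-processinstances-events.topic
    MP_MESSAGING_OUTGOING_KOGITO_PROCESSINSTANCES_EVENTS_TOPIC: kogito-processinstances-events
    # mp.messaging.outgoing.kogito-processinstances-events.value.serializer
    MP_MESSAGING_OUTGOING_KOGITO_PROCESSINSTANCES_EVENTS_VALUE_SERIALIZER: org.apache.kafka.common.serialization.StringSerializer
```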

Data Index deployment resource example using Knative eventing:

This deployment resource definition shows how the Data Index service can be configured and deployed to connect to an existing PostgreSQL database and consume Knative events.

DataIndex resource with PostgreSQL persistence and Knative eventing in a Kubernetes environment:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app.kubernetes.io/name: data-index-service-postgresql
        app.kubernetes.io/version: 2.0.0-SNAPSHOT
      name: data-index-service-postgresql
    spec:
      ports:
        - name: http
          port: 80
          targetPort: 8080
      selector:
        app.kubernetes.io/name: data-index-service-postgresql
        app.kubernetes.io/version: 2.0.0-SNAPSHOT
      type: ClusterIP
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/name: data-index-service-postgresql
        app.kubernetes.io/version: 2.0.0-SNAPSHOT
      name: data-index-service-postgresql
    spec:
      replicas: 1
      selector:
        matchLabels:
          app.kubernetes.io/name: data-index-service-postgresql
          app.kubernetes.io/version: 2.0.0-SNAPSHOT
      template:
        metadata:
          labels:
            app.kubernetes.io/name: data-index-service-postgresql
            app.kubernetes.io/version: 2.0.0-SNAPSHOT
        spec:
          containers:
            - name: data-index-service-postgresql
              image: quay.io/kiegroup/kogito-data-index-postgresql-nightly:main-2024-02-09 # (1)
              imagePullPolicy: Always
              ports:
                - containerPort: 8080
                  name: http
                  protocol: TCP
              env:
                - name: KUBERNETES_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
                - name: QUARKUS_DATASOURCE_USERNAME # (2)
                  value: postgres
                - name: QUARKUS_DATASOURCE_PASSWORD
                  value: pass
                - name: QUARKUS_DATASOURCE_JDBC_URL
                  value: jdbc:postgresql://newsletter-postgres:5432/postgres?currentSchema=data-index-service
                - name: QUARKUS_DATASOURCE_DB_KIND
                  value: postgresql
                - name: QUARKUS_HIBERNATE_ORM_DATABASE_GENERATION
                  value: update
                - name: QUARKUS_KAFKA_HEALTH_ENABLE
                  value: "false"
                - name: QUARKUS_HTTP_CORS
                  value: "true"
                - name: QUARKUS_HTTP_CORS_ORIGINS
                  value: /.*/
                - name: QUARKUS_FLYWAY_MIGRATE_AT_START # (4)
                  value: "true"
                - name: KOGITO_DATA_INDEX_QUARKUS_PROFILE # (3)
                  value: "http-events-support"
                - name: QUARKUS_HTTP_PORT
                  value: "8080"
    ---
    apiVersion: eventing.knative.dev/v1
    kind: Trigger # (5)
    metadata:
      name: data-index-service-postgresql-processes-trigger
    spec:
      broker: default
      filter:
        attributes:
          type: ProcessInstanceEvent # (6)
      subscriber:
        ref:
          apiVersion: v1
          kind: Service
          name: data-index-service-postgresql
        uri: /processes # (7)
    ---
    apiVersion: eventing.knative.dev/v1
    kind: Trigger # (5)
    metadata:
      name: data-index-service-postgresql-jobs-trigger
    spec:
      broker: default
      filter:
        attributes:
          type: JobEvent # (6)
      subscriber:
        ref:
          apiVersion: v1
          kind: Service
          name: data-index-service-postgresql
        uri: /jobs # (7)

| 1 | Reference the right Data Index image to match with the type of Database, in this case quay.io/kiegroup/kogito-data-index-postgresql-nightly:main-2024-02-09 |
| 2 | Provide the database connection properties |
| 3 | KOGITO_DATA_INDEX_QUARKUS_PROFILE: http-events-support to use the http-connector with Knative eventing. |
| 4 | To initialize the database schema at start using flyway |
| 5 | Trigger definition to filter the events that arrive to the Sink and pass them to Data Index |
| 6 | Type of event to filter |
| 7 | The URI where the Data Index service is expecting to consume those types of events. |

This deployment uses KOGITO_DATA_INDEX_QUARKUS_PROFILE: http-events-support. Workflow applications need to configure the connector to use quarkus-http and send the events to the Knative K_SINK.

You can find more information about Knative eventing and the K_SINK environment variable in Consuming and producing events on Knative Eventing in Quarkus.

To explore the specific configuration to add to the workflow to connect with Data Index using Knative eventing, see Data Index Knative eventing.

application.properties file to send events to Data Index using Knative eventing:

    mp.messaging.outgoing.kogito-processinstances-events.connector=quarkus-http
    mp.messaging.outgoing.kogito-processinstances-events.url=${K_SINK}
    mp.messaging.outgoing.kogito-processinstances-events.method=POST

If those configuration values were not present before the container image was created, they need to be passed as environment variables. More details about customizing Quarkus generated images can be found in Quarkus Container Images Customizing guide.
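A hedged sketch of that environment-variable route in a Kubernetes Deployment is shown below. It is an excerpt of a hypothetical workflow application container, not part of the original guide. The variable names assume the usual MicroProfile Config mapping of the three properties above (uppercase, non-alphanumeric characters replaced by underscores), and the url value assumes that the application's configuration layer resolves the ${K_SINK} expression at runtime; verify both against your Quarkus version.

```yaml
# Hypothetical excerpt: env section of the workflow application's container.
env:
  # mp.messaging.outgoing.kogito-processinstances-events.connector
  - name: MP_MESSAGING_OUTGOING_KOGITO_PROCESSINSTANCES_EVENTS_CONNECTOR
    value: quarkus-http
  # mp.messaging.outgoing.kogito-processinstances-events.url
  # Assumption: the expression is expanded by the application at runtime;
  # Kubernetes itself does not expand the ${...} syntax.
  - name: MP_MESSAGING_OUTGOING_KOGITO_PROCESSINSTANCES_EVENTS_URL
    value: "${K_SINK}"
  # mp.messaging.outgoing.kogito-processinstances-events.method
  - name: MP_MESSAGING_OUTGOING_KOGITO_PROCESSINSTANCES_EVENTS_METHOD
    value: POST
```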

A full example where the standalone Data Index service is deployed using Knative eventing can be found in the Quarkus Workflow Project with standalone services guide.
